Locally Testing Custom AWS Lambda Runtime Containers with Runtime Emulator Sidecar

Invoke Lambdas without installing the Lambda Runtime Interface Emulator locally or adding it to the Lambda container

Given that we are living in the 2020s, having to install various small utility packages just to interact with applications and tooling is old-fashioned, error-prone and non-reproducible.

Historically, this has been solved either by writing documentation that can go out of date or out of sync with the code, or by small scripts that achieve the goal but leave artifacts lying around. Not to mention that with the advent of

One such scenario I encountered recently was creating a custom AWS Lambda runtime from a custom base image, which requires installing aws-lambda-ric and testing the result locally.

Following AWS’s recommendation, we need to download the aws-lambda-rie executable and make it available. It is an emulator that lets us invoke the Lambda via curl.

Now the trick is to create a lightweight Docker container that holds the executable, built for the same architecture as your Lambda:

The above will then be leveraged in a local docker-compose.yaml as follows:

The most interesting part is that the Dockerfile above unpacks the executable into /rie-bin, which is mounted as a Docker volume at the same path in the docker-compose.yaml. The same volume is also mounted into the Lambda container at the same path, effectively sharing the executable across the containers.

The Lambda container is based on the AWS example here:

Which contains a simple NodeJS Lambda handler:
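As a minimal sketch, assuming the handler mirrors the one in AWS's NodeJS container image example, it could look like the following. The file name, the logging line and the response body are assumptions for illustration, not taken from the original repository.

```javascript
// index.js: hypothetical handler modeled on AWS's NodeJS container image example.
// The response body is an assumption for illustration.
const handler = async (event) => {
  // Log the incoming event so it appears in the container's output.
  console.log("Received event:", JSON.stringify(event));

  return {
    statusCode: 200,
    body: JSON.stringify("Hello from Lambda!"),
  };
};

// aws-lambda-ric resolves the handler by its "<file>.<export>" name, e.g. "index.handler".
exports.handler = handler;
```

With this layout, the runtime interface client would be started with `index.handler` as its handler argument.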

And it all comes together for a ‘no straggler left behind’ approach as follows:

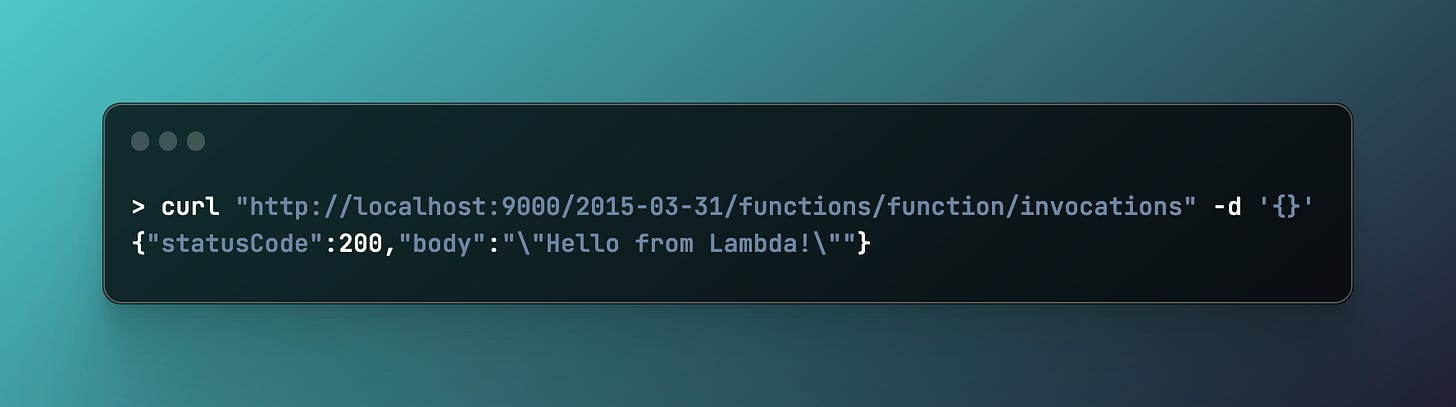

Now from a separate terminal we can use curl to invoke the function:
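The request that curl sends is a plain HTTP POST to the emulator's invocation path, so it can also be scripted. Below is a minimal NodeJS sketch of the same call. The helper names are hypothetical, and the host and port (`localhost:9000`) are assumptions that depend on the port mapping in your docker-compose.yaml; it also assumes Node 18+ for the global `fetch`.

```javascript
// invoke.js: hypothetical helper for calling the Runtime Interface Emulator.
// Path exposed by the emulator for function invocations.
const RIE_INVOCATION_PATH = "/2015-03-31/functions/function/invocations";

// Host and port are assumptions; adjust them to match your compose port mapping.
function buildInvocationUrl(host = "localhost", port = 9000) {
  return `http://${host}:${port}${RIE_INVOCATION_PATH}`;
}

// POST a JSON payload to the emulator and return the raw response text.
// Relies on the global fetch available in Node 18 and later.
async function invoke(payload = {}, url = buildInvocationUrl()) {
  const res = await fetch(url, {
    method: "POST",
    body: JSON.stringify(payload),
  });
  return res.text();
}

module.exports = { buildInvocationUrl, invoke };
```

With the containers running, `invoke({ ping: true }).then(console.log)` would print whatever the Lambda returns.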

As we can see, we invoked the function on the standard AWS Lambda invocation path and received the response once the Lambda completed execution. In Terminal 1, where the Lambda is running, we see the same output that would appear in CloudWatch Logs:

This is a brief yet powerful example of how to configure sidecars for any dependencies that you require.

The full code for an AWS Lambda Runtime Interface Emulator sidecar with a custom Lambda Docker runtime can be found here.

While this example is entirely in NodeJS, there is nothing stopping you from having the Lambda container use Go, Rust or any other language with custom-configured dependencies.

In conclusion, combining Docker containers with the AWS Lambda Runtime Interface Emulator provides a reproducible way to manage dependencies and test custom AWS Lambda runtimes locally.

This approach not only ensures reproducibility but also eliminates the need for installing multiple utility packages, thereby reducing potential errors. The flexibility of this method allows for the use of various programming languages, making it a versatile tool for any developer's toolkit.

Remember, the key to this process is the effective sharing of the executable across containers using Docker volumes. As we continue to innovate and streamline our development processes, such practices pave the way for more efficient and error-free coding environments.