Working around the AWS ECS Task Definition 65536 byte limit

How to roll out a container with thousands of environment variables within the service's hard limits

The problem

In a recent project I undertook the modernization of a legacy monolithic Java application running on EC2, moving it to the modern “cattle, not pets” model of the cloud-native realm. If you are not familiar with the term, it was originally coined by Microsoft engineer Bill Baker regarding SQL deployments, where

Pets

Servers get unique names and special treatment. When they’re “sick”, they’re carefully nursed back to health, often with a significant time and financial investment.

while

Cattle

Individual servers are part of an identical group. Numbers, not names, identify them, and they receive no special treatment. When something goes wrong, the server is replaced, not repaired in place.

After a laborious process that is out of scope for this topic, I finally reached the point of attempting to deploy the service on ECS, only to be welcomed by an error related to the task definition being limited to 65536 bytes.

This was quite a daunting error to encounter at the time, as the Java application had gathered nearly a thousand environment variables over the past two decades and, because of them, the task definition was over the hard limit of the service. After a brief moment of dread, I set out to apply my engineering mindset and find a workaround to this new limitation.

After a brief investigation I concluded that, as the environment variables had all been extracted from a multitude of Spring properties files and injected via Terraform (conversion done via a script, of course), the total storage required for their content, together with the resulting JSON and all its metadata needed for the Task Definition, exceeded the 65536 byte hard limit.

The basic solution

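The solution I settled on was to coalesce the property files into fewer, functionality-focused files and, as part of the Terraform deployment, to leverage filebase64 to encode each entire file into a single string that can be stored in one environment variable, keeping the Task Definition within the limits. Alternatively, we can leverage the Terraform local_file data source (data.local_file) and its content_base64 attribute. This matters for files generated during the run, such as the .zip archives used in the improved solution: they do not exist at the initial terraform run point, so referencing them through filebase64 would result in an error.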

As the containers all have a custom docker-entrypoint.sh file, the shell script takes these environment variables as part of the boot sequence, before exec-ing the main application, decodes them and places the result in files named after the environment variable, following a preset naming convention. In this way an environment variable named FILE_HYSTRIX_PROPERTIES has the FILE_ prefix dropped, the remainder converted to lower case and “_” replaced with “.”, yielding a hystrix.properties file with the base64-decoded contents.

The shell script to achieve this could look as follows:
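A minimal sketch of that decode step, assuming a POSIX shell and a base64 binary that accepts -d (GNU coreutils and BusyBox both do). The function names env_to_filename and decode_file_vars are illustrative and not taken from the original script; CONFIG_DIR is the target directory the application reads its property files from.

```shell
#!/bin/sh
# Sketch of the decode step of docker-entrypoint.sh (function names are assumptions).
# CONFIG_DIR is the directory the application reads its property files from.
CONFIG_DIR="${CONFIG_DIR:-/tmp}"

# FILE_HYSTRIX_PROPERTIES -> hystrix.properties:
# drop the FILE_ prefix, lower-case the rest, replace "_" with ".".
env_to_filename() {
  printf '%s' "${1#FILE_}" | tr 'A-Z_' 'a-z.'
}

# Decode every FILE_* environment variable into its target file.
decode_file_vars() {
  for name in $(env | sed -n 's/^\(FILE_[A-Z0-9_]*\)=.*/\1/p'); do
    eval "value=\${$name}"
    printf '%s' "$value" | base64 -d > "$CONFIG_DIR/$(env_to_filename "$name")"
  done
}

decode_file_vars
# A real entrypoint would end by handing over to the main application:
# exec "$@"
```

Keeping the name conversion in its own function makes the convention easy to check in isolation before the script is baked into an image.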

Having done this, we can be in one of the two following scenarios:

Deployment successful, but brittle: we are already near the hard limit of the service, and adding a handful of additional variables or simply updating some of them to lengthier values could push the definition over the limit.

Deployment failed, as we are still over the limit even with these optimizations.

The improved solution

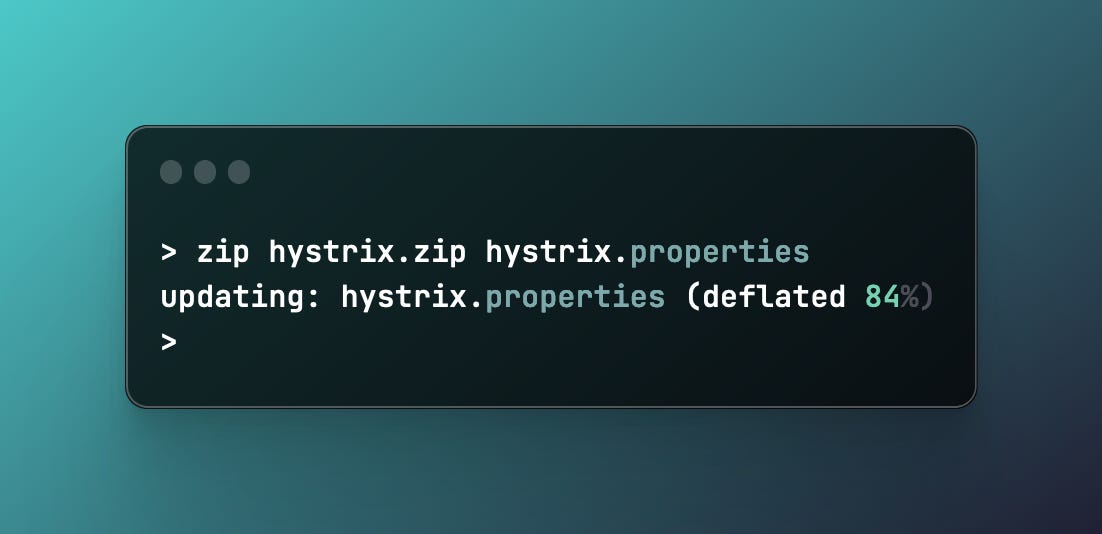

As the properties files are just text files, we can obtain further significant storage gains by also compressing each file before performing the filebase64 / data.local_file.zip_file.content_base64 step. As such, for each of our files we can run

zip hystrix.zip hystrix.properties

and reference the “.zip” instead of the raw file in the filebase64 step.

This can yield significant storage gains, as the zipped properties file below showcases:
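One way to measure the gain on your own files is sketched below, assuming zip and base64 are installed. The helper names encoded_size and compare_sizes are hypothetical; the numbers printed depend entirely on the input file, so no figures are claimed here.

```shell
#!/bin/sh
# Sketch: compare the base64 length of a file with that of its zipped form.
# Helper names are illustrative assumptions.

# Length in bytes of the base64 encoding of a file (line wraps removed).
encoded_size() {
  base64 < "$1" | tr -d '\n' | wc -c | tr -d ' '
}

# Zip the file into a scratch directory and print both encoded sizes.
compare_sizes() {
  file="$1"
  tmp=$(mktemp -d)
  zip -q -j "$tmp/archive.zip" "$file"
  echo "raw=$(encoded_size "$file") zipped=$(encoded_size "$tmp/archive.zip")"
  rm -rf "$tmp"
}
```

Calling compare_sizes hystrix.properties prints the two sizes side by side. Repetitive key/value text tends to compress well, while very small files may not benefit because of the archive overhead.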

In Terraform these steps would look like the following:

This allows us to continue having the raw text files available for editing as opposed to storing them in encoded, zipped format and having to perform

base64 decode

unzip

edit property

zip

base64 encode

As we now also have zip files placed in the environment variables, we also need to expand our docker-entrypoint.sh to handle zipped base64 encoded variables.

The updated script looks as follows:
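A sketch of how the entrypoint could handle both kinds of variables, under stated assumptions: plain files keep the FILE_ prefix described earlier, while zipped files use a hypothetical ZIP_ prefix (the original naming for zipped variables is not shown here). It also assumes unzip is installed in the image.

```shell
#!/bin/sh
# Sketch of an entrypoint step handling plain and zipped base64 variables.
# The ZIP_ prefix and all function names are assumptions for illustration.
CONFIG_DIR="${CONFIG_DIR:-/tmp}"

# List the names of environment variables starting with the given prefix.
vars_with_prefix() {
  env | sed -n "s/^\($1[A-Z0-9_]*\)=.*/\1/p"
}

# FILE_*: plain base64; the file name is derived from the variable name.
decode_plain_vars() {
  for name in $(vars_with_prefix FILE_); do
    eval "value=\${$name}"
    target=$(printf '%s' "${name#FILE_}" | tr 'A-Z_' 'a-z.')
    printf '%s' "$value" | base64 -d > "$CONFIG_DIR/$target"
  done
}

# ZIP_*: base64 of a zip archive; the archive already carries the file
# name, so its contents are extracted into CONFIG_DIR as-is.
decode_zipped_vars() {
  for name in $(vars_with_prefix ZIP_); do
    eval "value=\${$name}"
    archive=$(mktemp)
    printf '%s' "$value" | base64 -d > "$archive"
    unzip -o -q "$archive" -d "$CONFIG_DIR"
    rm -f "$archive"
  done
}

decode_plain_vars
decode_zipped_vars
# A real entrypoint would end by handing over to the main application:
# exec "$@"
```

Using a separate prefix keeps the decision explicit. Inspecting the decoded bytes for the zip signature would be an alternative that avoids a second naming convention.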

These encoded variables can then be referenced in the environment section of the task definition. If you are using the Terraform module for ECS, the code would look as below:

And as our container has the docker-entrypoint.sh as showcased above, the file will be decoded, unzipped and placed in the desired directory to be consumed by the application.

The optimal solution

While the above steps will significantly reduce the task definition size and most likely suffice for most use cases, if you have several large property files, you will still risk reaching the hard limit quickly, as the entirety of the data is still located within the task definition. The best approach would be to hold the data in a different “container” somewhere on AWS and only hold references to it within the definition specification.

It comes naturally then that the best location to hold these encoded variables would be within SSM Parameter Store (Systems Manager Parameter Store) as a Standard (4 KB) or Advanced (8 KB) parameter, or within Secrets Manager (up to 64 KB per secret value).

The added benefit of this move is that we increase our security posture as we can apply more stringent IAM permissions on the parameters and secrets, denying read/write permission to them for developers while still allowing view/edit of the task definition itself.

Not only that, but the main benefit of this approach is capacity: if we were to have 9 theoretical variables of 8 KB each (9 × 8192 = 73728 bytes), the improved solution above would run into the ECS service limit of 65536 bytes. Moving them into SSM as “Advanced” parameters, the task definition only holds a reference to each one, so we circumvent that limitation and can store many more variables.
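Before moving a value out of the task definition, it can help to check which Parameter Store tier it fits into. The sketch below uses the 4 KB and 8 KB limits quoted above; pick_tier is a hypothetical helper, and it assumes the payload is ASCII (true for base64), so string length equals byte count.

```shell
#!/bin/sh
# Sketch: choose a Parameter Store tier for a base64 payload by size.
# pick_tier is an illustrative helper, not part of any AWS tooling.
pick_tier() {
  size=${#1}   # base64 is ASCII, so characters == bytes
  if [ "$size" -le 4096 ]; then
    echo "Standard"
  elif [ "$size" -le 8192 ]; then
    echo "Advanced"
  else
    echo "too-large"
    return 1
  fi
}
```

The result could then be passed to the AWS CLI, for example aws ssm put-parameter --name "$PARAM_NAME" --type String --tier "$(pick_tier "$payload")" --value "$payload", where PARAM_NAME is a placeholder for whatever naming scheme you use.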

Immense gains for a very small change in the Terraform script from above:

Conclusion

In navigating the complexities of modernizing a legacy Java application for deployment on AWS ECS, the journey from encountering a daunting task definition limit to innovating a viable solution underscores the essence of cloud engineering: adaptability and problem-solving. The initial workaround, involving encoding and compressing environment variables, showcased creative engineering to fit within stringent constraints. However, the true breakthrough came with the optimization of utilizing AWS SSM Parameter Store and Secrets Manager to externalize configuration data, demonstrating a strategic pivot towards leveraging cloud-native services for scalability and efficiency.

This experience not only illustrates the technical challenges inherent in migrating to cloud-native architectures but also highlights the importance of embracing cloud services to enhance application deployment and management. By moving from a direct embedding of environment variables in the task definition to storing them in AWS's managed services, we not only circumvent the limitations of ECS but also gain the benefits of security, manageability, and scalability provided by AWS.

Such a journey is a testament to the evolving nature of cloud computing, where limitations are not roadblocks but rather catalysts for innovation. It emphasizes the need for continuous learning, experimentation, and adoption of cloud-native solutions to overcome challenges. As organizations continue to modernize their applications, this example serves as a reminder of the power of cloud services to transform application deployment strategies, ensuring they are both scalable and resilient in the face of changing technical landscapes.

In conclusion, the path from a constrained legacy application to a streamlined, cloud-native deployment is fraught with challenges. Yet, it is precisely these challenges that drive the innovation and strategic thinking necessary to leverage the full potential of cloud computing. By adopting a mindset that views limitations as opportunities for optimization, organizations can navigate the complexities of modernization with confidence, ensuring their applications are not only compatible with the cloud but are also positioned to take full advantage of its capabilities.

The example codebase for this can be found here.

It contains a minimal example ECS cluster with a task definition of a custom image built on top of alpine. The image copies the custom docker-entrypoint.sh and sets the command that will be executed after the script's completion, which in our case prints the contents of /tmp/hystrix.properties; CONFIG_DIR points to /tmp, which is where our local hystrix.properties should be placed.

You can run it via

terraform init && terraform apply -auto-approve

Once it completes, we can check the logs, which output the contents of /tmp/hystrix.properties from the container, by running

aws logs tail /aws/ecs/EngineeringMindscape-Task-Limits-DEV/example

which should output the following: